11.11.2015

What we've learned about editing in VR360

Is editing possible in virtual reality? What principles and methods can be used to ensure that the audience's perception of virtual reality is not destroyed? We have decided to share our experience on this subject, using our VRability project as an example.

NB. This material was prepared by Georgy Molodtsov, co-author and creative director of the VRability project, which is being carried out together with the Eyera Team as a non-profit social project. This material may only be used with special permission from the author.

The rapid development of 360-degree spherical video technology has attracted the interest of both technical and creative specialists. It is the most remarkable development in cinema technology and cinematic language since the appearance of lightweight cameras, which in turn spawned the “New Wave” of cinema: long-term observational cinema and the modern “live camera” video style.

We decided to dive into this world of new formats and technologies. Our primary goal was not so much to create a profitable project as to understand this brave new world.

The VRability project was created with two main goals in mind:

- to use the most advanced technology available to reach important social goals

- to research and develop new forms of cinematic language

Having combined our extensive creative and technical knowledge, we began researching the possibilities offered by spherical video, devoting all of our free time to expanding the horizons of this new direction and exploring its amazing potential.

Virtual reality video demands courage, experience, and patience. Of course, it also requires funding, but we have been lucky to receive charitable support from people and companies who help us for free or offer significant discounts so that we can reach our social goals. Among them are: GoPro (Yuma), FIBRUM, Kolor, “Katarzyna”, Tennis park, Sokolniki Ice Palace, Dmitry Agutin (producer and co-director of the documentary film about our project), developer Denis Ivanov, and the protagonist of our first film, Maxim Kiselev, as well as his family and his tennis and ice-dancing partners.

The idea of showing the potential of people who use wheelchairs was not accidental. Georgy had worked as a film director with “Perspectiva”, an organization for people with disabilities, creating social advertising campaigns and films about people with disabilities. Stanislav understood the problem of limited mobility first-hand: after an accident, he lost his mobility for over six months, and VR was one of the methods used to stimulate his brain activity and help him regain nerve sensitivity in his body. The most common way of watching VR, sitting in a swivel chair, takes the viewer one step closer to the experience of someone in a wheelchair.

Here is an introductory video about the project:

Many technical solutions were found during the project's initial stages: vests, body-mounts, cable-wires, special fixtures, and helmet-mounted cameras with subsequent post-production to show the helmet intact in the shot. It's important to understand that editing is not simply assembling already filmed material; it is the careful planning of shots, their interrelationship, rhythm and balance before filming even begins.

What we typically see online in VR360 is mostly one-shot static scenes. Their style and stage of development are reminiscent of the first experiments in film, such as “The Arrival of a Train” and “Workers Leaving the Lumière Factory”. It's static. It's a wide shot.

Discussions online and at conferences invariably claim that there is no editing in VR, that there is no such thing as a “shot”, and that there can be no storyboard; that we mustn't restrict a person's right to choose where to look. But if we look at VR in the broader context of cinema history, D. W. Griffith's methods of introducing editing into wide shots were also once seen as impossibly revolutionary.

We have proven that there is editing in VR360.

But instead of “shot” we say “orientation”.

Instead of a storyboard, we plan the composition and location of objects in relation to the frontal orientation.

Close-ups and wide shots can all still be used; they are defined by the location of the main object and its relation to the current orientation (shot/camera).
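A sphere has no frame edge, so whether the viewer experiences a close-up or a wide shot depends on how much of their field of view the main object fills when they face it. The Python below is our own rough illustration, not a standard definition. It estimates framing from the object's size and distance. The 90° headset field of view and the 0.5 / 0.2 thresholds are hypothetical values chosen for the example.

```python
import math


def angular_size_deg(object_size_m: float, distance_m: float) -> float:
    """Angle (degrees) that an object of a given size subtends at the camera."""
    return math.degrees(2.0 * math.atan(object_size_m / (2.0 * distance_m)))


def framing(object_size_m: float, distance_m: float, fov_deg: float = 90.0) -> str:
    """Rough framing label for what the viewer sees when facing the object.

    fov_deg is an assumed headset field of view; the 0.5 / 0.2 thresholds
    are hypothetical and only illustrate the idea.
    """
    fraction = angular_size_deg(object_size_m, distance_m) / fov_deg
    if fraction >= 0.5:
        return "close-up"
    if fraction >= 0.2:
        return "medium"
    return "wide"


# A 1.8 m figure at 3 m fills a bit over a third of a 90-degree view; at 10 m, about a ninth.
print(framing(1.8, 3.0), framing(1.8, 10.0))  # prints: medium wide
```

Under these assumptions, moving the camera closer to the protagonist turns the same orientation from a wide shot into a close-up, which is how we think of shot scale in a sphere.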

We'd like to discuss the major “discoveries” that we feel are important for our future work in VR.

1. Composition Cut

VR has a lot of technical specialists. However, we feel that the persistent view that there is no editing in VR has scared many professional editors away from the medium. When we started editing the video clip of our protagonist's wheelchair ice-dancing performance, we saw very quickly that traditional editing methods work well in VR, first and foremost editing based on the object's location in the shot:

We have a frontal orientation: the direction the viewer faced when they sat down to watch the film. We have a key active object in the shot. Say the object takes a step to the left, and the viewer turns their head slightly in the same direction. We then cut so that the protagonist in the wider shot ends up in the same place in the sphere, allowing the viewer to retain story continuity.
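The composition cut can be made concrete with a minimal Python sketch. It does not describe the tools we used. It assumes equirectangular frames stored as NumPy arrays whose column index grows with yaw (-180° at the left edge, +180° at the right), and that the protagonist's yaw in each shot is already known, for example marked by hand. The function names are hypothetical. Under those assumptions, re-orienting the incoming shot about the vertical axis is just a circular shift of pixel columns.

```python
import numpy as np


def wrap_degrees(angle: float) -> float:
    """Wrap an angle to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def yaw_shift_for_cut(prev_subject_yaw: float, next_subject_yaw: float) -> float:
    """Yaw rotation (degrees) for the incoming shot so that its subject
    lands where the subject was in the outgoing shot."""
    return wrap_degrees(prev_subject_yaw - next_subject_yaw)


def rotate_equirect_yaw(frame: np.ndarray, yaw_degrees: float) -> np.ndarray:
    """Rotate an equirectangular frame about the vertical axis.

    Assumes column index grows with yaw, so a pure yaw rotation is a
    circular shift of columns (rounded to whole pixels).
    """
    width = frame.shape[1]
    shift = int(round(yaw_degrees / 360.0 * width))
    return np.roll(frame, shift, axis=1)


# Outgoing shot: protagonist at -30 degrees (the viewer has turned left).
# Incoming wide shot, as filmed: protagonist at +10 degrees.
shift = yaw_shift_for_cut(-30.0, 10.0)  # -40 degrees
```

Every frame of the incoming shot would then be passed through `rotate_equirect_yaw` with that shift, so the viewer's gaze lands on the protagonist right after the cut.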

Traditionally, VR stitchers center an event relative to the horizon, without emphasizing active moving objects.

But if one works with color, scale and mise-en-scène (the protagonist's movement trajectory), more editing possibilities come into play.

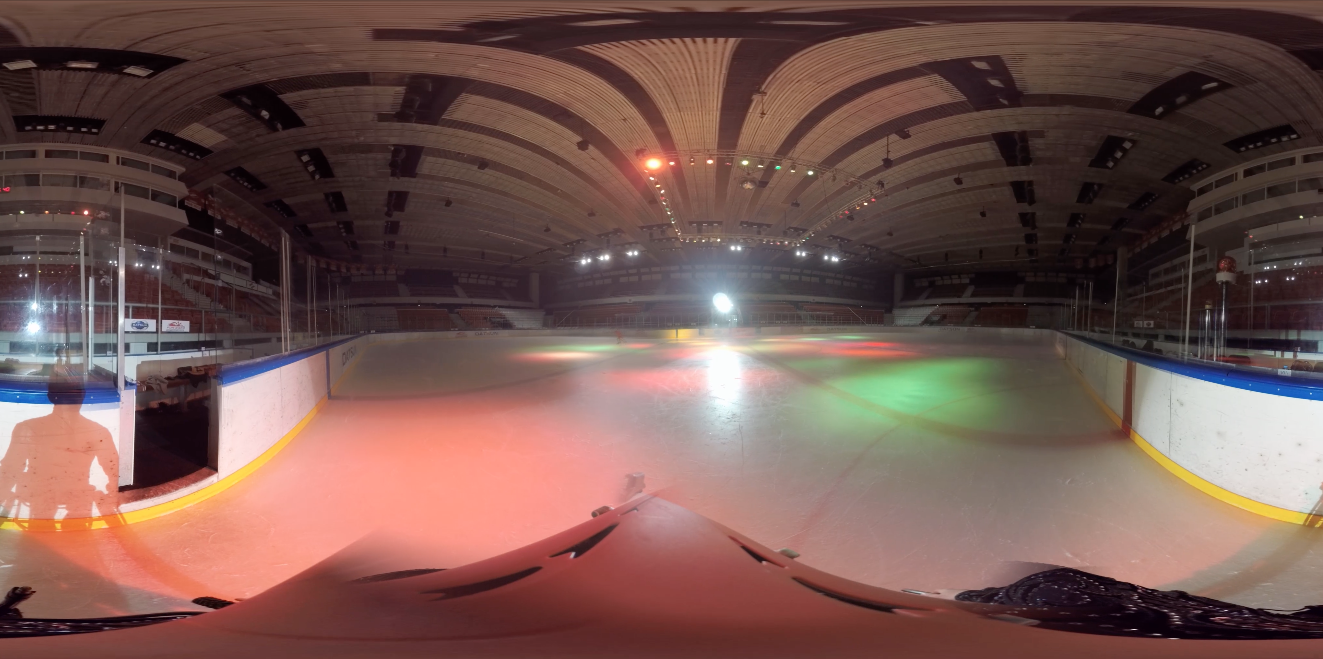

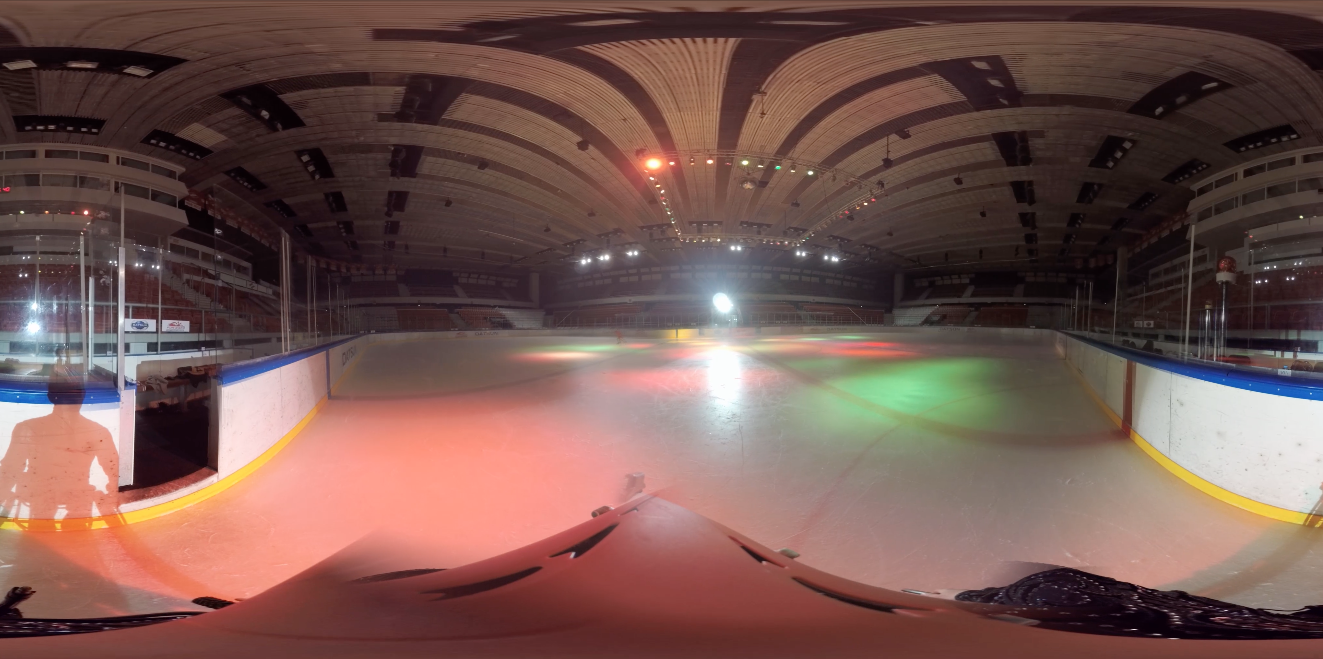

In our case, the camera hooked to a cable-wire flew over the ice, leaving the protagonists on the left and behind. That's the wide shot.

The next shot is from the main protagonist's helmet. However, instead of showing him looking straight ahead (since the viewer is currently looking left-and-back), we change the orientation and place the center of action at the same left-and-back point where it was in the previous shot, then gradually return the action zone to the front-and-center orientation.
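The gradual return of the action zone to the front can be modeled as a yaw offset that decays to zero over a few seconds. The sketch below shows one hypothetical way to schedule that offset per frame. It uses a smoothstep ease so that the rotation starts and ends gently. The easing curve, the frame rate and the function names are assumptions made for illustration, not what we actually used.

```python
from typing import List


def smoothstep(u: float) -> float:
    """Classic smoothstep ease on [0, 1]: zero slope at both ends."""
    u = min(max(u, 0.0), 1.0)
    return u * u * (3.0 - 2.0 * u)


def return_to_front(start_offset_deg: float, duration_s: float,
                    fps: float = 30.0) -> List[float]:
    """Per-frame yaw offsets easing from start_offset_deg back to 0.

    start_offset_deg is where the action sits at the cut (e.g. -135 for
    left-and-back); the last value is 0, i.e. front-and-center.
    """
    frames = max(int(round(duration_s * fps)), 1)
    return [start_offset_deg * (1.0 - smoothstep(i / frames)) for i in range(frames + 1)]


offsets = return_to_front(-135.0, 2.0)  # 61 values over two seconds at 30 fps
```

Each offset would be applied as a yaw rotation to the corresponding helmet-camera frame. Because the ease is slow at the start, the viewer is not pulled away from the point where the previous shot left them.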

We even crossed the line a little to the right and then cut to another wide shot (a static shot from the cable-wire camera) in which the action was taking place in the same area.

2. Cloud Cut

A Cloud Cut is a widening-mask transition between episodes that gradually fills the visual sphere. It is a seamless transition between scenes that maintains orientation.

An example of a Cloud Cut:

Of course, this idea was in the air and can be seen in other videos. However, our first Cloud Cut VR video was uploaded two weeks before the release of the U2 video made by VRSE. On the one hand, it's a pity that we didn't get a chance to tell people about it sooner. On the other hand, the fact that the largest cinematic VR company has used a similar idea in its work tells us that we're on the right track. Of course, we cannot compete with Chris Milk in terms of PR, but the fact that our video was published online well before the premiere of theirs does say something about the level of our ideas!

This example shows that the composition of the episode tells the viewer the direction from which the Cloud Cut will appear. Editing is determined not only by how you combine already filmed material, but largely by how you decide to combine it before you start shooting.
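Here is a minimal NumPy sketch of a widening spherical mask in the spirit of the Cloud Cut. It is not our actual After Effects setup. It assumes equirectangular frames shaped `(height, width, channels)`, with yaw running -180° to +180° left to right and pitch +90° to -90° top to bottom. The mask is a soft cap centred on a seed direction whose radius grows with `progress` from 0 to 1. Neither frame is rotated, so orientation is preserved. The seed direction corresponds to what the composition decides: where the cloud appears from. All names and the 20° feather are hypothetical.

```python
import numpy as np


def sphere_directions(height: int, width: int) -> np.ndarray:
    """Unit view vectors (height, width, 3) for every pixel centre of an
    equirectangular frame (assumed yaw/pitch convention, see lead-in)."""
    yaw = (np.arange(width) + 0.5) / width * 2.0 * np.pi - np.pi
    pitch = np.pi / 2.0 - (np.arange(height) + 0.5) / height * np.pi
    yaw, pitch = np.meshgrid(yaw, pitch)  # both shaped (height, width)
    return np.stack(
        [np.cos(pitch) * np.sin(yaw), np.sin(pitch), np.cos(pitch) * np.cos(yaw)],
        axis=-1,
    )


def cloud_mask(height: int, width: int, seed_yaw_deg: float, seed_pitch_deg: float,
               progress: float, feather_deg: float = 20.0) -> np.ndarray:
    """Soft spherical cap around the seed direction.

    progress 0 gives an empty mask; progress 1 covers the whole sphere.
    """
    yaw0, pitch0 = np.radians(seed_yaw_deg), np.radians(seed_pitch_deg)
    seed = np.array([np.cos(pitch0) * np.sin(yaw0), np.sin(pitch0), np.cos(pitch0) * np.cos(yaw0)])
    cos_angle = np.clip(sphere_directions(height, width) @ seed, -1.0, 1.0)
    angle = np.degrees(np.arccos(cos_angle))   # angular distance from the seed
    radius = progress * (180.0 + feather_deg)  # cap radius including the soft edge
    return np.clip((radius - angle) / feather_deg, 0.0, 1.0)


def cloud_cut(outgoing: np.ndarray, incoming: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Blend two (height, width, channels) frames through the mask."""
    m = mask[..., None]
    return outgoing * (1.0 - m) + incoming * m
```

Animating `progress` from 0 to 1 over the transition makes the incoming episode spread outward from the seed until it fills the sphere.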

3. Close-up Cut

We often hear that one cannot manipulate viewer behavior in VR. But in film we have special effects, editing, manipulation, etc.! Why don't we make all movies using just establishing shots? Then we wouldn't manipulate the viewer either! VR works the same way traditional cinema does. The moment the camera is switched on, the placement of the tripod, the choice of subject: these are all manipulations. We want to tell a story, and VR is our chosen method for telling it. We don't prevent the viewer from looking left or right, but we do draw their attention to our story.

In the shot, a girl is spinning with the protagonist. Traditional VR would have used a static camera, and we would have had to spin in our chairs along with the girl. Because we “tied” the girl to the central orientation, you have no reason to spin along with her: she is always in front of your eyes, while the changing background simulates movement. And if you look down, you can see how much effort it takes the protagonist to spin the wheelchair.
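"Tying" a subject to the central orientation amounts to rotating every frame by the opposite of the subject's yaw. The sketch below shows one way to compute those per-frame corrections. It assumes a hypothetical list of the subject's yaw per frame, in degrees, for instance from hand-marked positions. Angles are unwrapped so that a subject spinning past the ±180° seam does not cause a jump. An optional moving average damps tracking jitter. Each correction would then be applied as a yaw rotation, for example by shifting the columns of an equirectangular frame.

```python
import numpy as np


def lock_subject_to_front(subject_yaw_deg, window: int = 5) -> np.ndarray:
    """Per-frame yaw correction (degrees) that keeps a tracked subject at yaw 0.

    subject_yaw_deg: the subject's yaw in each frame (hypothetical tracking
    input). window: odd moving-average length; 1 disables smoothing.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    # Unwrap so crossing the +/-180 degree seam is continuous, not a 360-degree jump.
    yaw = np.unwrap(np.radians(np.asarray(subject_yaw_deg, dtype=float)))
    if window > 1:
        pad = window // 2
        padded = np.pad(yaw, pad, mode="edge")  # repeat end values so length is preserved
        yaw = np.convolve(padded, np.ones(window) / window, mode="valid")
    correction = -np.degrees(yaw)
    return (correction + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)


# The girl spins through the back of the sphere: 170, 180, -170, -160 degrees.
corrections = lock_subject_to_front([170.0, 180.0, -170.0, -160.0], window=1)
```

Smoothing is a trade-off. A wider window hides jitter but lets the subject drift slightly off-center during fast spins.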

4. Bodymount Shot

Even though this is a technical solution, it still relates to editing, only editing within the shot. The camera is on a bodymount, and the orientation faces the direction of movement. When we turn our head, we see the face of the person walking behind us. In a single shot we get both his subjective viewpoint and his face, reactions and emotions.

Technical solutions will eventually make it possible to remove the equipment from the shot; it's a question of testing. But the creative solution has already been found.

4. Action Shots

Flying on cable-wires, mounting the camera on a helmet, a wheelchair, a car and so forth: it's all done for the sake of dynamics. It is difficult, it is hard to keep the horizon level, and it occasionally makes the viewer feel “car-sick”, but it is dynamic nonetheless. It takes viewers out of their usual role as passive audience members and guides them in the right direction. But dynamics is not the only element: any dynamic sequence needs rhythm and “breaks”, static shots that let the viewer rest and look around.

5. Subtitles

Our colleague Denis Ivanov has developed a GearVR application that displays subtitles from an SRT file in the foreground. This is ideal for screening the project internationally. However, we noticed that with subtitles, some shots have to be made less dynamic and more stable.

A foreground that stays fixed no matter where the viewer turns their head requires calm camera movement. So we decided to use an English-language soundtrack for the more dynamic parts and to use subtitles only where the audio plays over a more static image.
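We can't reproduce Denis Ivanov's GearVR application here. To illustrate only the underlying idea, here is a minimal Python sketch that reads cues from an SRT file and looks up the subtitle to show at a given playback time. In a player, that text would be drawn on a head-locked layer in the foreground. The parser is our own simplified assumption. It handles only the basic SRT layout: an index line, a `start --> end` timing line, then text lines, with blocks separated by blank lines.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

_TIMESTAMP = re.compile(r"(\d+):(\d{2}):(\d{2})[,.](\d{3})")


@dataclass
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str


def _to_seconds(stamp: str) -> float:
    match = _TIMESTAMP.fullmatch(stamp.strip())
    if match is None:
        raise ValueError(f"bad SRT timestamp: {stamp!r}")
    hours, minutes, seconds, millis = map(int, match.groups())
    return hours * 3600 + minutes * 60 + seconds + millis / 1000.0


def parse_srt(source: str) -> List[Cue]:
    """Parse basic SRT text into cues (index lines are ignored)."""
    cues = []
    for block in re.split(r"\n\s*\n", source.strip().replace("\r\n", "\n")):
        lines = block.splitlines()
        for i, line in enumerate(lines):
            if "-->" in line:
                start, end = line.split("-->", 1)
                end = end.split()[0]  # drop any position hints after the end time
                cues.append(Cue(_to_seconds(start), _to_seconds(end), "\n".join(lines[i + 1:])))
                break
    return cues


def active_cue(cues: List[Cue], t: float) -> Optional[str]:
    """Subtitle text to display at playback time t (seconds), if any."""
    for cue in cues:
        if cue.start <= t < cue.end:
            return cue.text
    return None
```

Knowing the cue times also makes it easy to check, shot by shot, which subtitles fall on dynamic footage, the situation we decided to avoid.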

6. Shot length

Having reviewed the dynamic editing and discussed it with our colleagues, we concluded that the most comfortable minimum shot length is 15 seconds: the shot is shown in full for 13 seconds, and one second at the beginning and one at the end are used for transitions. We will try to achieve this in subsequent films. However, this is just an observation we've made, not an axiom. Any rule can be broken if one knows why it should be broken.
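The arithmetic of this rule of thumb can be sketched in a few lines of Python. We assume, for the example only, that neighbouring shots overlap by one transition, so each new shot begins during the previous shot's final second. Under that assumption a 15-second shot leaves 13 clean seconds, and every cut removes one second from the total running time. The names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlannedShot:
    index: int
    start: float      # seconds on the timeline
    end: float
    clean: float      # seconds shown in full, outside both transitions
    too_short: bool   # shorter than the comfortable minimum


def plan_timeline(lengths: List[float], transition: float = 1.0,
                  min_length: float = 15.0) -> List[PlannedShot]:
    """Lay shots end to end, overlapping neighbours by one transition.

    Assumes each shot spends `transition` seconds at each end in a
    transition, and that one shot's outgoing transition is the next
    shot's incoming one.
    """
    plan, start = [], 0.0
    for i, length in enumerate(lengths):
        end = start + length
        plan.append(PlannedShot(i, start, end, length - 2 * transition, length < min_length))
        start = end - transition  # next shot begins during this one's last second
    return plan


plan = plan_timeline([15.0, 18.0, 12.0])  # the 12-second shot gets flagged as too short
```

Flagging rather than rejecting short shots fits the spirit of the rule: it is an observation, and a shot may be kept short on purpose.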

7. Composition with a foreground object and parallax / Helmet Recovery

We actively use rigs mounted on a helmet and on a vest. We have not yet restored all the footage, but thanks to Mettle SkyBox Studio the helmet recovery process has become much quicker.

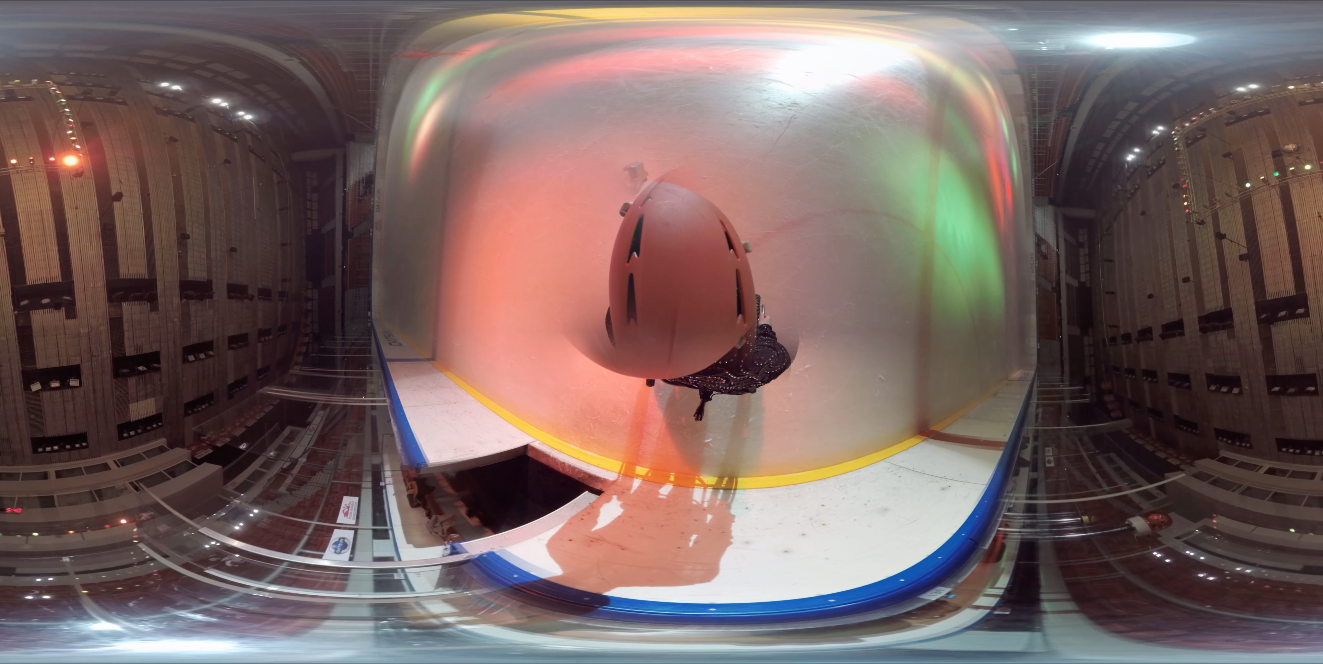

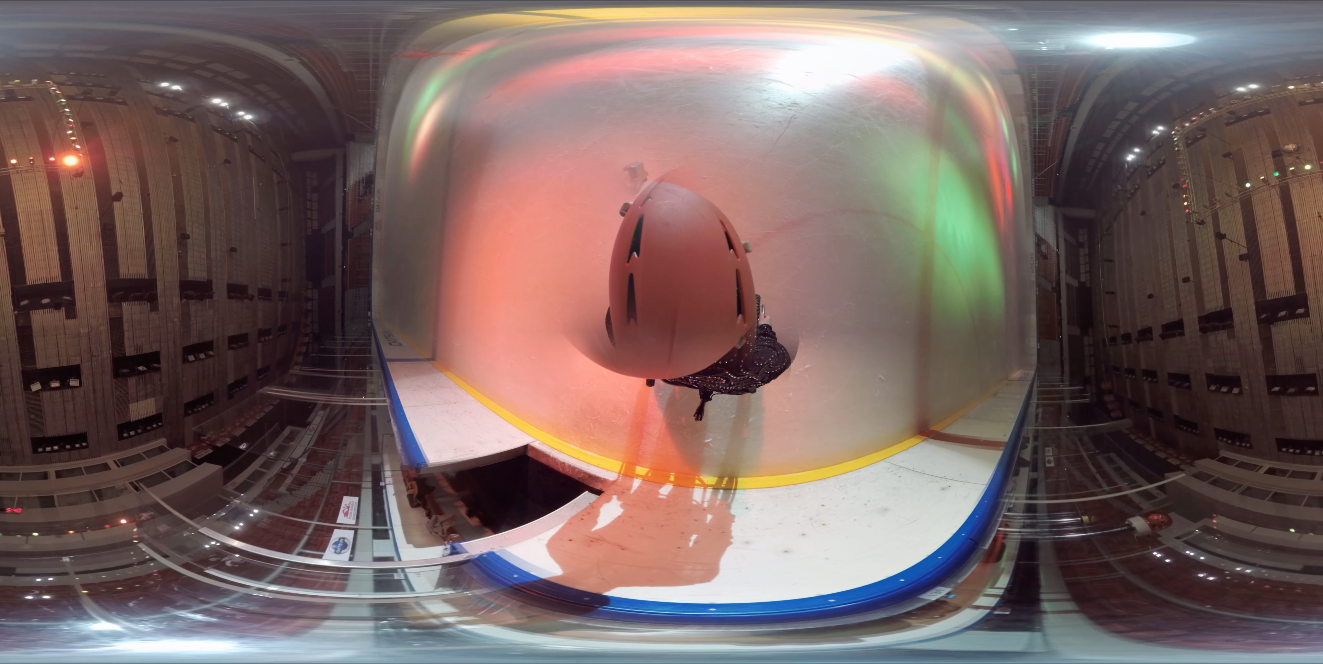

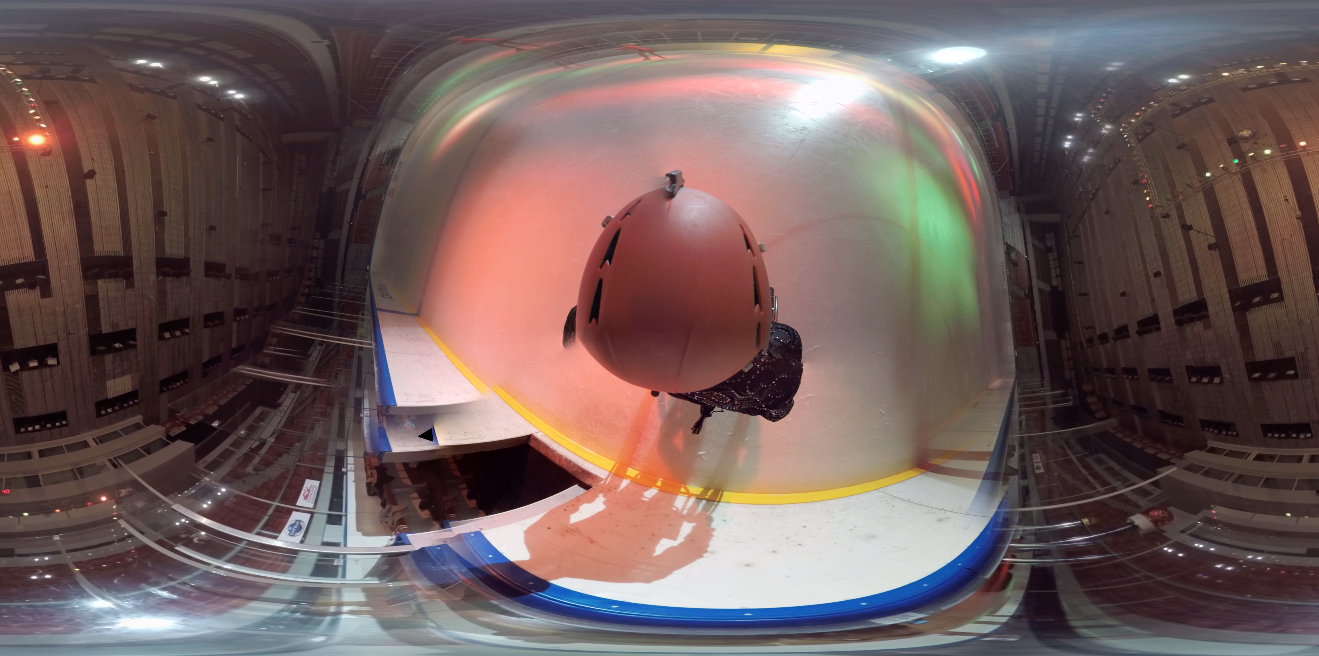

1. This is the footage with the correct horizon but a “broken” helmet:

2. This is what it looks like from above:

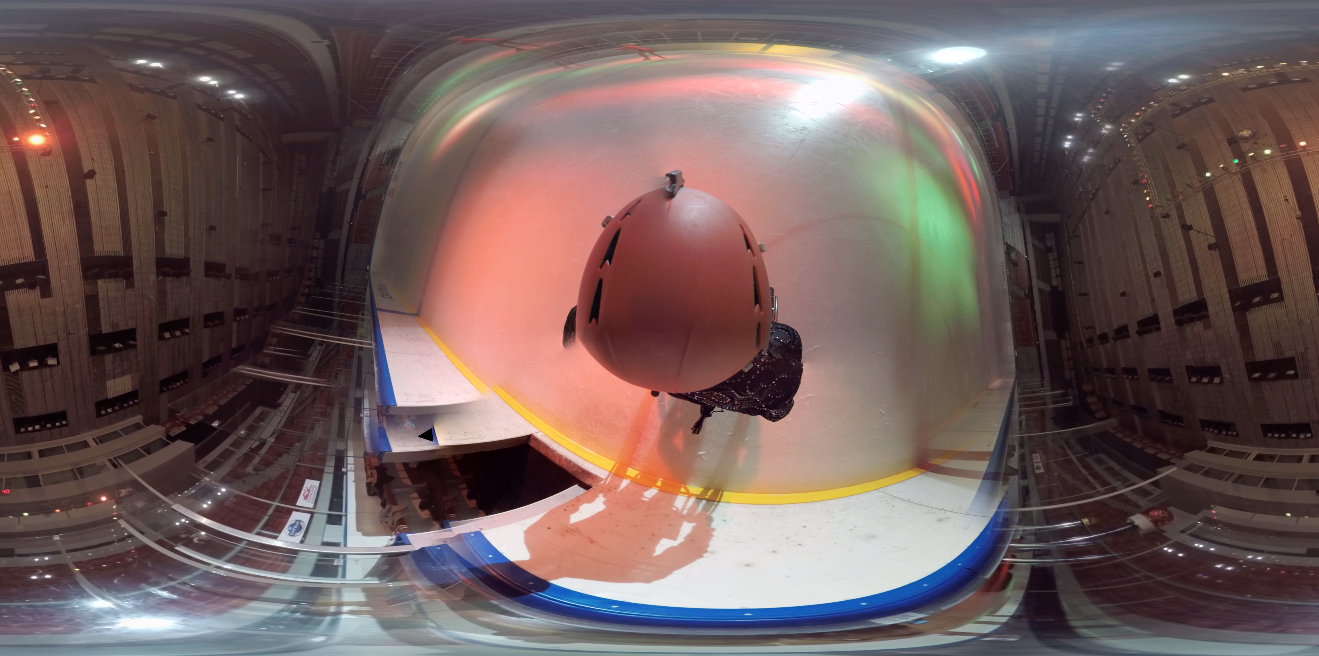

3. By re-stitching the panorama so that the helmet stays whole (we don't care about everything else), we get this wonderful picture:

4. After Effects + masks + SkyBox Studio, and we get:

It is a very quick process, but only if you understand the goal. The goal could be to show subtitles or to produce an aesthetically pleasing shot. A standard patch of the kind used for static shots will not work here, because of reflections, changes in direction and the closeness of the object to the camera point (for example, if you bend down, that point changes). You saw the example with the recovered helmet earlier.

Finally, I'd like to say that the art of editing is a combination of methods based on the psychology of how viewers interpret visual information. The balance of close-ups and wide shots, static and dynamic shots, color solutions and separations: all of these are aspects of editing acquired through experience of working in traditional film.

We have a lot more to explore: audio editing, graphics and interactive elements. And of course, we anticipate improvements in the quality of filmed material and of its exhibition. When this technology becomes as accessible as VR360 viewing on smartphones already is, we have to be ready to apply creative methods, to tell stories and to draw the viewer into the story. We don't need to wait for a technical revolution; it has already happened. Our goal is to help this technology and new creative approaches evolve toward a single aim: ensuring that what you create impresses the viewer, changes the viewer, and touches their heart and soul.

Georgy Molodtsov

About the VRability project

VRability is a non-commercial socio-cultural project that uses virtual reality video to motivate people with disabilities to be more active. The project's goals are cultural and educational.

About the author

Georgy Molodtsov – director, producer, creative director of the VRability project, creative director of the “Social Advertising Laboratory”, vice-president of the Documentary Guild, selection committee member of the Moscow International Film Festival. Author of over 20 social advertising campaigns, which have received over 50 prizes at advertising and film festivals. Graduate of VGIK (BA), MA in Film and Video 2015. In 2009-2010 taught “Basic Editing” at the Higher School of Economics and VGIK. Holds master classes and creative laboratories on creating and developing social campaigns.

Contacts: info@vrability.ru
